I wanted to talk about why I chose iWARP as my RDMA transport rather than RoCE. First of all, I'm a big fan of using the open standard for any networking protocol rather than a proprietary one. iWARP is an IETF-defined standard, whereas RoCE is not. iWARP uses TCP as the transport protocol for its payload, whereas RoCEv2 uses UDP. Originally RoCE was a different payload altogether and could not be routed, because there were no IP headers around the payload. If a router decapsulates a frame and doesn't see a header it recognizes, it will probably just drop it. So previously only iWARP could be routed across networks. Although I can imagine the need for routing, most implementations (like mine) would avoid it. I also like that the transport protocol for iWARP is TCP, just like iSCSI. TCP is inherently reliable: it re-transmits any segment that was not acknowledged. Since RoCEv2 uses UDP as the transport protocol, and UDP itself offers no re-transmission or guaranteed delivery, that reliability has to come from somewhere else.

RoCEv2, which I will now just call RoCE, requires L2 functions to provide the lossless feature set. It does this by utilizing priority flow-control, which basically lets a device on an L2 link send pause messages when a NIC buffer is about to fill up, thus preventing tail-drop. Although regular flow-control could be used, RoCE NICs are meant to be converged, meaning you will have multiple traffic types passing over the same network infrastructure. You could have VM and storage traffic on the same set of NICs. After all, it's in the name: RDMA over Converged Ethernet. The whole point of using Ethernet for all of this is to converge it and cut costs.
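To make the difference between a per-class pause and a whole-link pause concrete, here is a minimal toy model in Python. It is only a sketch under my own assumptions: the `Port` class, the two traffic class names, and the buffer size of 4 frames are all made up for illustration and do not correspond to any real NIC or switch API.

```python
from dataclasses import dataclass, field


@dataclass
class Port:
    """Toy receive port with one buffer per traffic class (hypothetical model)."""
    capacity: int = 4            # frames each class buffer can hold (made-up number)
    per_priority: bool = True    # True = PFC-style pause, False = link-level pause
    buffers: dict = field(default_factory=lambda: {"storage": 0, "vm": 0})

    def paused_classes(self):
        """Return the set of traffic classes the sender must currently hold back."""
        full = {c for c, n in self.buffers.items() if n >= self.capacity}
        if not full:
            return set()
        # PFC pauses only the congested class; a plain pause stops the whole link.
        return full if self.per_priority else set(self.buffers)

    def offer(self, traffic_class):
        """Sender tries to transmit one frame; returns True if it was accepted."""
        if traffic_class in self.paused_classes():
            return False
        self.buffers[traffic_class] += 1
        return True


pfc = Port(per_priority=True)
link = Port(per_priority=False)
for port in (pfc, link):
    for _ in range(4):
        port.offer("storage")    # fill the storage buffer on both ports

pfc_vm_ok = pfc.offer("vm")      # storage is paused, VM traffic still flows
link_vm_ok = link.offer("vm")    # the whole link is paused, VM traffic is blocked
```

In this toy model a full storage buffer only holds back storage frames when the pause is per priority, while the link-level pause also blocks the unrelated VM traffic.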


Back to the point about flow-control. Regular flow-control will pause a whole interface, not a traffic class. This can cause blocking of ports and unwanted latency when we have a converged infrastructure. Priority flow-control fixes this issue. There are also other L2 features at play here, but the gist of the conversation is usually priority flow-control providing lossless Ethernet via pause frames for individual traffic classes. The problem I have with this is that not only do your NICs need to support these features, but so do your switches. This means no cheap 10GbE cut-through switches for your storage network. iWARP does not have this limitation; it can run on any type of switch as long as it can carry Ethernet. Now, I know TCP headers are a minimum of 20 bytes while UDP headers are 8 bytes, but it's the built-in reliability that I like. RDMA in general is supposed to have latency very close to InfiniBand, without the cost of InfiniBand. TCP's only real enemy is latency. Latency on TCP connections can limit bandwidth (read about the bandwidth-delay product). For intra-switch traffic we can expect low latency (thanks to RDMA and cut-through switches), and thus fast and reliable storage.
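For anyone who doesn't want to go read about the bandwidth-delay product, here is a small Python sketch of the arithmetic. The numbers are assumptions picked for illustration (a 10 Gb/s link, a 64 KiB window, and round-trip times of 50 µs and 1 ms); they are not measurements from my setup, and the function names are my own.

```python
def bdp_bytes(link_bps, rtt_us):
    """Bandwidth-delay product: bytes that must be in flight to keep the link full."""
    return link_bps * rtt_us // (8 * 1_000_000)


def max_throughput_bps(window_bytes, rtt_us):
    """Upper bound on throughput when at most one window is sent per round trip."""
    return window_bytes * 8 * 1_000_000 // rtt_us


LINK_BPS = 10_000_000_000        # assumed 10 Gb/s link
WINDOW = 64 * 1024               # assumed 64 KiB window

# Assumed 50 microsecond round trip, as you might hope for within one switch.
in_flight_local = bdp_bytes(LINK_BPS, 50)
limit_local = max_throughput_bps(WINDOW, 50)

# Assumed 1 millisecond round trip, for example across a routed network.
in_flight_far = bdp_bytes(LINK_BPS, 1000)
limit_far = max_throughput_bps(WINDOW, 1000)

print(f"50 us RTT: {in_flight_local} bytes in flight, window limit {limit_local} b/s")
print(f"1 ms RTT:  {in_flight_far} bytes in flight, window limit {limit_far} b/s")
```

With these assumed numbers, the 64 KiB window covers the roughly 62 KB needed at a 50 µs round trip, but at 1 ms the same window caps throughput at about 524 Mb/s, far below the 10 Gb/s line rate. That is the sense in which latency limits bandwidth on a TCP connection.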

It's important to mention that both implementations offer lower CPU utilization. The whole point of RDMA is to offload everything to the NIC. With iWARP the TCP/IP stack is offloaded to the NIC, so my hosts are not interrupted to process it. During periods of peak utilization you will see around 5% CPU usage from the system rather than 20-30% on each end of the connection. This is especially important on VM hosts that access storage over the network rather than having it directly attached. If I can save 20% CPU utilization on my host, I can now make room for more VMs!

Server 2012 R2 offers a feature that can utilize RDMA to transport SMB. This is called SMB Direct, and it is the feature that I am utilizing with SMB 3.0.

How to verify RDMA:

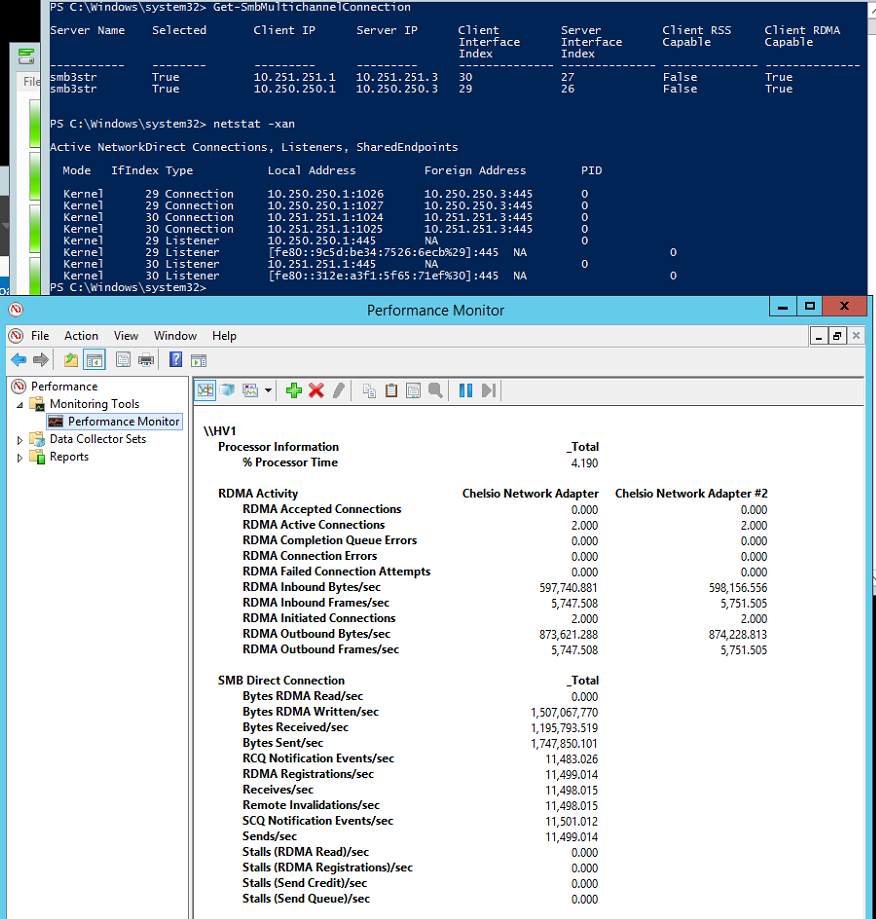

`Get-SmbMultichannelConnection` (PowerShell) allows us to check the active paths for SMB and whether they are RSS or RDMA capable.

`netstat -xan` (CMD) lists the NetworkDirect connections, which lets us see that the connection is handled in the kernel and offloaded rather than going through our regular TCP/IP stack.

Finally, the performance counters for CPU, RDMA, and SMB Direct verify that data is actually passing through.

What's interesting to note is that in taskmgr you will see the traffic stats of RDMA when using iWARP/Chelsio. However, when using RoCE/Mellanox you only get to see the stats via performance counters.

The Chelsio T420s are the oldest NICs capable of RDMA on Server 2012 R2. Although the T320s say they are RDMA capable, they do not work with 2012 R2. Save yourself the headache.

Here is the vendor that I purchased mine from.

http://www.ebay.com/itm/152244261154?_trksid=p2060353.m2749.l2649&ssPageName=STRK%3AMEBIDX%3AIT